Make Your Value Stream Visible Through Structured Logging

Looking at software development before the cloud revolution, using logging frameworks was kind of common. They supported the dev in formatting the logs, buffering them and sending them to the correct sink such as Elasticsearch. With the rise of cloud platforms applications tended to get smaller units and direct integrations of cloud applications into observability or log archive platforms is now possible with a few lines of configuration. It's time to review if using logging frameworks pays off or a simple 'console.log' and forward the application/function output to some provider such as datadog will do the job.

The direct comparison

Let's have a view on usual requirements a developer has on the logging facility. Loggers should be available everywhere in the code, either you want to log specific infos deep in the code stack or log unhandled exceptions on the top entry point. As a log message might not be specific enough to understand the context in which it was written, you will enrich it with additional metadata describing a current snapshot of the context. This leads to the next point: once you want to serialize full objects you need to take care of removing sensitive information like tokens. It is up to you deciding how useful it is to log the whole object. Once you are working in a system under load, there will be a high volume of log messages produced, so the key essence of logging is the search-ability and filterability of messages afterwards.

Console.log

Structured logging

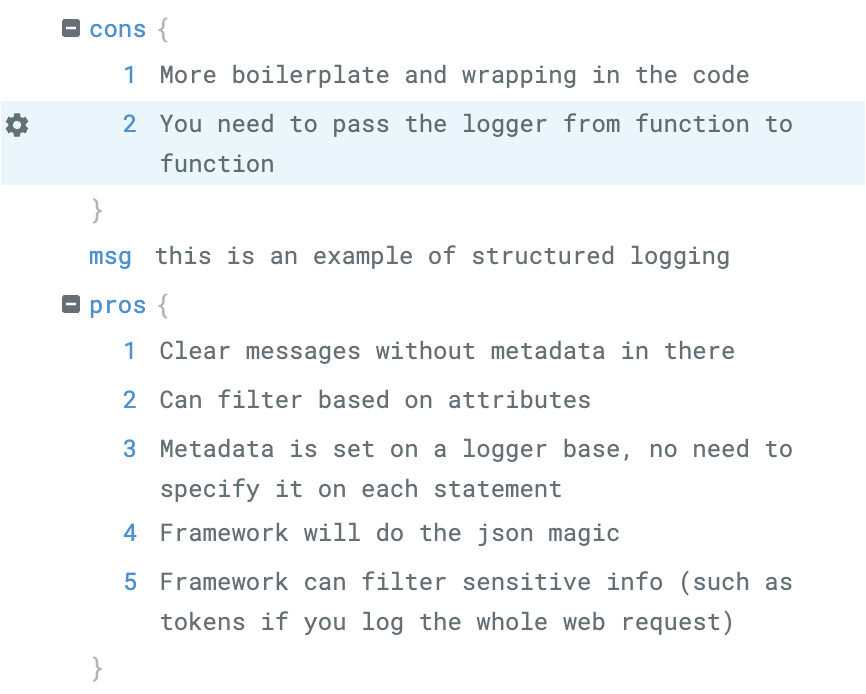

The examples above shows the visual difference between the 2 approaches.

'console.log' writes all information into the message part whereas the structured logger keeps the message clean and appends additional metadata to the attributes. Let's have a more detailed view on the characteristics:

Setting up a Structured Logger

First, find a logging framework for your programming language. In our TypeScript application we use Winston:

Initializing a logger

const logger: WinstonLogger = createLogger({

levels: {

error: 0,

warn: 1,

info: 2,

debug: 3,

},

level: process.env.LOG_LEVEL ?? 'info',

transports: new transports.Console({

format: format.combine(

format((info) => {

filterProps(info, ['Authorization', 'X-On-Behalf-Of']);

return info;

})(),

format.errors({ stack: true }),

format.timestamp({ format: 'YYYY-MM-DD HH:mm:ss.SSS Z' }),

format.prettyPrint(),

format.json()

),

}),

exitOnError: false,

});

We define a console transport and its format, filtering sensitive properties such as 'Authorization' and 'X-On-Behalf-Of'.

Next we need a factory to create a specific logger for our entry points such as lambda functions

Logger factory

export const loggerFactory = (moduleName: string, meta: Record<string, unknown> = {}): Logger => {

const correlationId = uuidv4();

const metaState = {

correlationId,

...meta,

};

const child = logger.child({

moduleName,

...metaState,

});

return new LoggerImpl(child, metaState);

};

Hand over common metadata records at creation.

Common Metadata will be used for all log messages, automatically setting a correlation id and wrapping the logger into a logger implementation, that tracks the current common metadata for the context.

Logger Implementation

export interface Logger {

extendMeta(meta: Record<string, unknown>): Logger;

childWithMeta(meta: Record<string, unknown>): Logger;

error(message: string, optionalParams?: Record<string, unknown>): void;

errorWith(message: string, key: string, data: unknown): void;

errorException(error: unknown, optionalParams?: Record<string, unknown>): void;

errorExceptionWithMessage(

message: string,

exception: unknown,

optionalParams?: Record<string, unknown>

): void;

warn(message: string, optionalParams?: Record<string, unknown>): void;

warnWith(message: string, key: string, data: unknown): void;

info(message: string, optionalParams?: Record<string, unknown>): void;

infoWith(message: string, key: string, data: unknown): void;

debug(message: string, optionalParams?: Record<string, unknown>): void;

}

export class LoggerImpl implements Logger {

constructor(

private logger: WinstonLogger,

private metadata: Record<string, any> = {}

) {

this.logger = logger;

this.metadata = metadata;

}

// will create a new child logger with additional meta

childWithMeta(meta: Record<string, unknown> = {}): Logger {

const newMeta = { ...this.metadata, ...meta };

const childLogger = childLoggerFactory(this.logger, newMeta);

return new LoggerImpl(childLogger, newMeta);

}

// will extend the current logger with metadata

extendMeta(meta: Record<string, unknown> = {}): Logger {

this.metadata = { ...this.metadata, ...meta };

this.logger = childLoggerFactory(this.logger, this.metadata);

return this;

}

Every 'info', 'warn', etc. message provides a second 'optionalParams' record where you can pass metadata relevant for this single log message. E.g. you sent a webrequest and you want to log the result status code.

At some place you might generate additional metadata, such as once created an asset you get an assetID. The extendMeta function will take this new meta and all further requests will log this assetID.

In branch out situations or in loop processing it makes sense to create a new child logger based on the current logger. Imagine you have initialized a logger and then you want to batch process Kafka events. You can create a child logger with an own metadata context based on the current logger. See image 1.

Where do I create my logger?

Generally you will create it in the outermost layer. In our case we have written a decorator which wraps the lambda call, initializes the logger with basic metadata and catches unhandled exceptions. So every exception keeps the common metadata. The logger is handed over to each inner layers of the function. In case of Kotlin/Java you could use a the logger factory to inject a logger for your context. In the example below you see how the logger gets more specific over time. Imagine you are handling Kafka records. For each record you are processing you create a new child logger setting common metadata such as an asset Id. Any unhandled exception will be processed in the log decorator and you still keep the basic log context of the topmost logger such as correlation id which helps to allocate the context for a logged exception (e.g. give me all log statements related to this error message).

Image 1 - Lifetime of base and child loggers, their usage (blue) and their common properties (green)

Catch up

In this post we have compared structured logging with 'console.log'. If you are using a cloud environment connected to your observability environment, your functions are small and correlation of log messages is not a requirement, 'console.log' might suffice. In more complex scenarios, where you write multiple messages per second and log messages are correlated as they span multiple workflow steps, structured logging is a better option.

Using this approach we were able to span an end 2 end dashboard over multiple independent services and applications in our value stream to visualize most important steps an entity will go through.